1. What is the SSIS 469 error and why you should care

First off: “SSIS 469” isn’t a neatly documented error code from Microsoft. It’s more of a community nickname for a class of data‐flow and validation errors that show up in SSIS packages.

These failures typically happen when your data-flow task hits something unexpected: schema changes, data type mismatches, connection failures, or buffer and memory pressure. Because the code itself isn’t formally recognised, it can feel vague and frustrating.

Why it matters:

- If your ETL pipeline fails (especially overnight jobs), reports are delayed and data quality suffers.

- Because the “469” label covers multiple underlying causes, you need a systematic approach rather than guessing.

- By understanding how to troubleshoot it effectively, you reduce downtime and make your SSIS packages more robust.

2. Common root causes of the SSIS 469 failure

Here are the most frequent triggers for SSIS 469-style issues, which you’ll want to check first.

a) Schema or metadata changes

One of the most common faults: The source or target schema changed (new column, data type altered, constraints added), but the SSIS package wasn’t updated. When the package executes, the metadata mismatch causes validation to fail.

Example: a VARCHAR column is widened to VARCHAR(100), or a NOT NULL constraint is added, while your package logic still expects the old definition.
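One way to catch this kind of drift before the package runs is to compare a saved snapshot of the columns the package was built against with the table's current definition. The sketch below is a minimal illustration in Python, run outside SSIS; the function name `diff_schema` and the sample column dictionaries are hypothetical, and in practice the two mappings would come from wherever you store or query schema metadata.

```python
from typing import Dict, List


def diff_schema(expected: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Compare column-name -> type-string mappings and describe each difference."""
    changes = []
    for name in sorted(expected.keys() - actual.keys()):
        changes.append(f"missing column: {name}")
    for name in sorted(actual.keys() - expected.keys()):
        changes.append(f"new column: {name}")
    for name in sorted(expected.keys() & actual.keys()):
        if expected[name].lower() != actual[name].lower():
            changes.append(f"type changed: {name} {expected[name]} -> {actual[name]}")
    return changes


# Hypothetical snapshots: what the package expects vs. what the table looks like today.
package_view = {"CustomerId": "int", "Name": "varchar(50)", "LastPurchaseDate": "datetime"}
table_today = {
    "CustomerId": "int",
    "Name": "varchar(100)",
    "LastPurchaseDate": "datetime2(7)",
    "Email": "varchar(255)",
}

for change in diff_schema(package_view, table_today):
    print(change)
```

An empty result means the snapshot and the live table agree; anything else is a prompt to re-validate the package before the next scheduled run.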

b) Data type mismatches or conversion issues

If your data-flow transformation tries to convert from one type to another (string → int, datetime2 → datetime, etc) and something is out of range or invalid, you’ll hit failures.

Example: the destination column is datetime but your source has a value outside the valid range for datetime.
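The string → int case mentioned above can be checked the same way before data ever reaches the package. This is a small Python sketch, not SSIS code: `int32_problem` is a hypothetical helper, and it assumes the destination is a 4-byte signed integer.

```python
INT32_MIN, INT32_MAX = -2**31, 2**31 - 1  # range of a 4-byte signed integer


def int32_problem(text):
    """Return None if text converts cleanly to a 32-bit int, else a short reason."""
    try:
        value = int(text.strip())
    except ValueError:
        return "not a number"
    if not INT32_MIN <= value <= INT32_MAX:
        return "out of range"
    return None


# Hypothetical sample values from a source column.
for sample in ["42", "abc", "3000000000"]:
    print(repr(sample), "->", int32_problem(sample))
```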

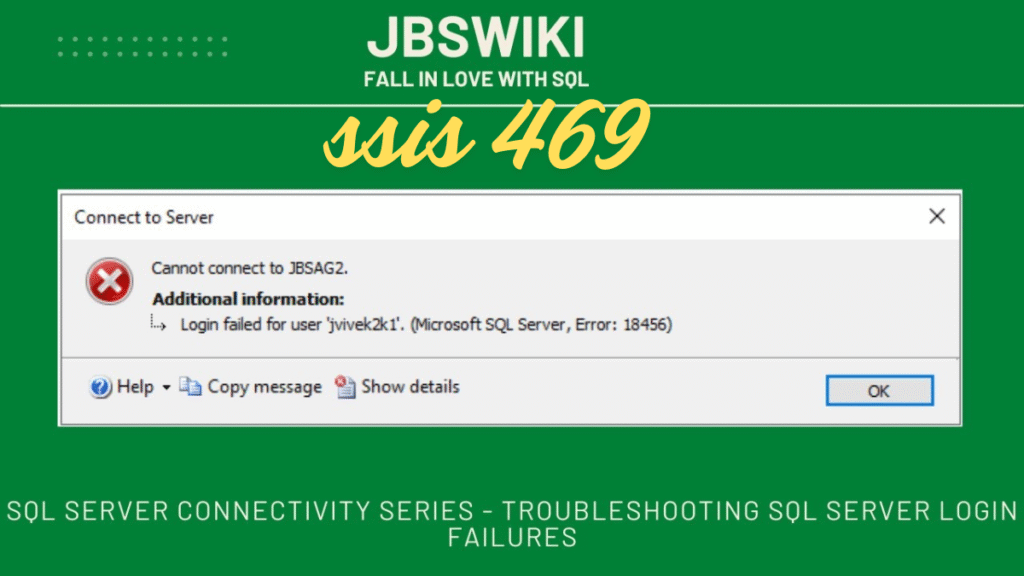

c) Connection / resource failures

Your connection manager might fail (bad credentials, network timeout, database moved) or some external dependency breaks. These failures sometimes surface as “469 type” issues because SSIS aborts the data‐flow early.

d) Buffer/ memory/ performance constraints

Large data volumes, insufficient memory, wrong buffer configuration—these can lead SSIS to fail during data flow. If your DefaultBufferSize or DefaultBufferMaxRows aren’t tuned, you might get mysterious failures.

e) Custom tasks or script components

If you have a Script Component or custom component in your data flow (or a Script Task in the control flow), unhandled exceptions there may bubble up as generic failure codes. Since “469” is used informally, this is a possibility.

3. Step‐by‐step troubleshooting process

When you see SSIS 469 or a generic failure in a Data Flow Task, follow this approach:

Step 1: Capture detailed logging

Before diving in, make sure your package is logging enough detail. Enable logging for the Data Flow Task, and for events like OnError, OnProgress, OnTaskFailed. Having proper error text rather than just “Task failed” gives you a huge head start.

Step 2: Identify the exact failing component

Open your SSIS package in BIDS/SSDT and inspect the Data Flow where it’s failing. Which Transformation or Destination shows failure? If you can reproduce interactively, you might use Data Viewers or debug mode to inspect the row(s) causing the issue.
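If the log has been exported to rows, the failing component is usually the source of the earliest OnError event; later errors are often just the task and package reporting that a child failed. The Python sketch below assumes each log row is a dictionary with `starttime`, `event`, `source`, and `message` keys. Those key names and the sample messages are made up for illustration, so adapt them to whatever your log provider actually writes.

```python
def first_error(records):
    """Return the earliest OnError record, or None if the run logged no errors."""
    errors = [r for r in records if r["event"] == "OnError"]
    return min(errors, key=lambda r: r["starttime"]) if errors else None


# Hypothetical log rows; ISO-8601 timestamps sort correctly as plain strings.
log_rows = [
    {"starttime": "2024-05-01T02:00:05", "event": "OnPreExecute",
     "source": "Load Customers", "message": ""},
    {"starttime": "2024-05-01T02:00:09", "event": "OnError",
     "source": "OLE DB Destination", "message": "sample: value out of range"},
    {"starttime": "2024-05-01T02:00:10", "event": "OnError",
     "source": "Load Customers", "message": "sample: component failed"},
    {"starttime": "2024-05-01T02:00:11", "event": "OnTaskFailed",
     "source": "Load Customers", "message": ""},
]

culprit = first_error(log_rows)
if culprit:
    print(culprit["source"], "-", culprit["message"])
```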

Step 3: Check metadata and schema alignment

- Inspect your source and destination table schemas: changes in columns, data types, constraints?

- In SSIS, open the component editor and view the Input/Output columns: are all aligned to actual source/target definitions?

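- If the metadata looks stale, reopen the source or destination component so SSIS re-reads the external columns (the Advanced Editor has a Refresh button for this). For projects deployed to the SSIS catalog, you can also right-click the package in SSMS and choose Validate.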

This step addresses one of the main causes.

Step 4: Check data types & conversions

- Look at each transformation: Derived Column, Data Conversion, Lookup, etc. Are you converting types correctly?

- For example, if a string is longer than the maximum length of a target, or a date is out of range, you’ll see failures.

- Use Data Conversion explicitly rather than relying on implicit conversions.

This holds especially for larger data flows where junk or unexpected values slip through.
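The string-length case is easy to screen for on a sample extract. Below is a minimal Python sketch that reports every value longer than its target column; `find_truncations`, the sample rows, and the length limits are all hypothetical.

```python
def find_truncations(rows, max_lengths):
    """Yield (row_index, column, actual_length) for each value longer than its target column."""
    for i, row in enumerate(rows):
        for column, limit in max_lengths.items():
            value = row.get(column)
            if value is not None and len(value) > limit:
                yield i, column, len(value)


# Hypothetical sample rows and destination column widths.
rows = [
    {"Name": "Ann", "City": "Oslo"},
    {"Name": "A" * 60, "City": None},
]
target_widths = {"Name": 50, "City": 30}

for row_index, column, length in find_truncations(rows, target_widths):
    print(f"row {row_index}: {column} has {length} chars, limit {target_widths[column]}")
```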

Step 5: Check connection managers & external dependencies

- Test each Connection Manager: open its configuration, Test Connection.

- Are there network issues? Has the database server changed? Credentials expired?

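- If you run the package under a SQL Agent job, remember that it executes as the SQL Server Agent service account or a proxy account, whose permissions may differ from the account you used in development.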
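When a connection test fails, it helps to separate "the server is unreachable" from "the login was rejected". A quick TCP reachability check from the machine that runs the package answers the first question. The Python sketch below uses only the standard library; `can_reach` is a hypothetical helper, and the host name in the comment is a placeholder. It confirms only that something is listening on the port, not that credentials or database permissions are valid.

```python
import socket


def can_reach(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


# Example call (placeholder host; 1433 is the default SQL Server port):
# print(can_reach("sqlserver.example.internal", 1433))
```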

Step 6: Inspect buffer settings / memory / performance

- In the Data Flow Task properties, review DefaultBufferSize and DefaultBufferMaxRows. Large values or insufficient memory may cause failure mid-flow.

- If the package processes large volumes or complex transformations, consider breaking it into smaller chunks, staging tables, or using batch processing.

- Monitor memory and system resources while executing to see if server pressure is an issue.
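To get a feel for how the two buffer properties interact, a rough estimate is that a buffer holds as many rows as fit in DefaultBufferSize, capped at DefaultBufferMaxRows. The Python sketch below uses the documented defaults (10 MB and 10,000 rows) and a hypothetical estimated row width. It is a simplified model for reasoning about settings; the engine applies additional internal rules when it sizes buffers.

```python
DEFAULT_BUFFER_SIZE = 10 * 1024 * 1024  # default DefaultBufferSize: 10 MB
DEFAULT_BUFFER_MAX_ROWS = 10_000        # default DefaultBufferMaxRows


def estimated_rows_per_buffer(row_bytes,
                              buffer_size=DEFAULT_BUFFER_SIZE,
                              max_rows=DEFAULT_BUFFER_MAX_ROWS):
    """Rough estimate: rows that fit in one buffer, capped by the row limit."""
    if row_bytes <= 0:
        raise ValueError("row_bytes must be positive")
    return min(max_rows, buffer_size // row_bytes)


# Hypothetical row widths in bytes.
for width in (500, 4000):
    print(width, "bytes/row ->", estimated_rows_per_buffer(width), "rows per buffer")
```

With narrow rows the row cap is the limiting factor; with wide rows the byte size is. Knowing which one applies tells you which property is worth adjusting.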

Step 7: If custom script/component is used, debug it

- If a Script Component (C# or VB) is in the data flow, open it, check for unhandled exceptions, logging inside the script.

- Use try‐catch blocks and log meaningful messages inside the script so you don’t just get “failed” but get context.

- Check the version compatibility of the custom component with your SSIS version.
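The try-catch advice boils down to one pattern: catch the low-level exception, attach the row number and data that triggered it, and re-raise. A real Script Component is written in C# or VB and would report the message through the component's own error-reporting calls (for example FireError); the Python sketch below only illustrates the pattern, and every name in it is hypothetical.

```python
class RowProcessingError(Exception):
    """Raised when a row fails, carrying the row number and data for the log."""


def process_rows(rows, transform):
    """Apply transform to each row; on failure, re-raise with row context attached."""
    results = []
    for row_number, row in enumerate(rows, start=1):
        try:
            results.append(transform(row))
        except Exception as exc:
            raise RowProcessingError(
                f"row {row_number} failed in {transform.__name__}: {exc!r}; data={row!r}"
            ) from exc
    return results


def parse_amount(row):
    """Hypothetical transformation: convert the Amount field to a float."""
    return float(row["Amount"])


try:
    process_rows([{"Amount": "10.5"}, {"Amount": "n/a"}], parse_amount)
except RowProcessingError as err:
    print(err)
```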

Step 8: Re‐test after applying fix

Once you believe you’ve addressed the root cause, execute the package in the development environment, then in staging, then production. Monitor logs and ensure the error does not recur.

Step 9: Set up monitoring to detect early breaks

- Configure package logging to a table/file to capture failures.

- Use event handlers (OnError, OnTaskFailed) inside the package to send alerts or capture diagnostic info.

- Consider implementing checkpoints or restart capabilities if the flow is long.

4. Prevention and best practices

It is far better to reduce the chance of SSIS 469 happening than to fix it after the fact. Here are proactive steps:

Keep metadata and schema in sync

- Use database change monitoring: If a table changes, re‐validate affected SSIS packages.

- Version your packages and source/target schemas, align changes with ETL updates.

- Avoid manual schema changes without pipeline team notification.

Explicit data conversions and error handling

- Don’t rely on implicit conversions inside SSIS: Use Data Conversion or Derived Column.

- Use error outputs in your data flow: rather than failing the whole package, divert bad rows for logging or remediation.
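The idea behind an error output is simple: each row is checked, clean rows continue, and failing rows are set aside together with the reason. The Python sketch below mimics that routing outside SSIS. The function `route_rows`, the `_errors` field, and the two sample checks are hypothetical and only show the shape of the logic.

```python
def route_rows(rows, checks):
    """Split rows into (good, rejected).

    checks maps a column name to a function returning an error string or None.
    """
    good, rejected = [], []
    for row in rows:
        errors = []
        for column, check in checks.items():
            reason = check(row.get(column))
            if reason:
                errors.append(f"{column}: {reason}")
        if errors:
            # Keep the original data and record why the row was diverted.
            rejected.append({**row, "_errors": "; ".join(errors)})
        else:
            good.append(row)
    return good, rejected


def required(value):
    return "missing value" if value in (None, "") else None


def looks_like_email(value):
    return None if value and "@" in value else "not an email address"


sample_rows = [
    {"CustomerId": "1", "Email": "a@example.com"},
    {"CustomerId": "", "Email": "no-at-sign"},
]
good, rejected = route_rows(sample_rows, {"CustomerId": required, "Email": looks_like_email})
print(len(good), "good;", len(rejected), "rejected")
print(rejected[0]["_errors"])
```

Keeping the rejection reason next to the row is what makes later remediation practical: the bad rows can be loaded into a quarantine table and reviewed without rerunning the package.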

Limit package complexity / modularize

- Keep package logic focused and modular. Very large single data‐flows tend to suffer more from subtle failures.

- Use staging tables for heavy loads, then smaller flows for cleansing/transforming.

Tune buffer and resource settings

- Understand your environment (memory, CPU, I/O). Configure appropriate DefaultBufferSize, DefaultBufferMaxRows, and BufferTempStoragePath values.

- Avoid setting extremely high buffer sizes without understanding the implications.

Build robust logging and alerting

- Use SSIS built‐in logging providers (SQL Server, flat file) and include context (package name, task name, error description, row count).

- Build event handlers inside SSIS for OnError, OnTaskFailed to capture and send alerts.

- Maintain dashboards to monitor failures and performance metrics.

Use version control and deployment pipelines

- Keep your SSIS project under version control (Git or TFS).

- Include validation steps before deployment so changes are reviewed.

- Use automated testing (small sample data) after schema changes.

Train your team on frequent issues

- Make sure your team knows the common root causes (schema drift, data mismatches, connection updates).

- Keep a knowledge base of past failures and resolutions.

5. Real-World Example: Diagnosing SSIS 469 in action

Let’s walk through a hypothetical (but realistic) scenario.

Scenario: A nightly ETL loads customer data from a staging table into a data warehouse. One morning the package fails with “Data Flow Task failed … SSIS 469”. The error log shows: Conversion failed because the value exceeded the range allowed by the destination datetime column.

Diagnosis steps:

- Enable full logging; identify the Data Flow component where it broke.

- Check destination table: the LastPurchaseDate column is datetime (which supports 1753-01-01 to 9999-12-31).

- Check staging table: the source column LastPurchaseDate was changed by the source system to datetime2(7) and now contains values earlier than 1753 (say 1500-01-01).

- In SSIS, inspect component: Data Conversion was configured but still allowed the invalid date through (it doesn’t implicitly restrict the minimum).

- Fix: Add a Derived Column transformation ahead of the destination:

`ISNULL([LastPurchaseDate]) ? NULL(DT_DBTIMESTAMP) : ([LastPurchaseDate] < (DT_DBTIMESTAMP2, 7)"1753-01-01 00:00:00" ? (DT_DBTIMESTAMP)"1753-01-01 00:00:00" : (DT_DBTIMESTAMP)[LastPurchaseDate])`

- Re-test package. It succeeds.

- Document the schema change, add alerting so next time an upstream system changes datatype you’ll know.
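The clamping rule in the fix can be stated compactly: keep NULLs as NULL, raise anything earlier than the datetime minimum to that minimum, and pass everything else through. The Python sketch below expresses the same rule so it can be tested on sample values outside the package; `clamp_to_datetime_min` is a hypothetical name, and the sketch ignores the precision difference between datetime2 and datetime.

```python
from datetime import datetime

SQL_DATETIME_MIN = datetime(1753, 1, 1)  # lower bound of SQL Server's datetime type


def clamp_to_datetime_min(value):
    """Return None for None, the datetime minimum for earlier values, else the value."""
    if value is None:
        return None
    return max(value, SQL_DATETIME_MIN)


# Hypothetical sample values, including the out-of-range date from the scenario.
for sample in (None, datetime(1500, 1, 1), datetime(2024, 5, 1, 12, 30)):
    print(sample, "->", clamp_to_datetime_min(sample))
```

Clamping silently changes data, so it is a judgment call. Some teams prefer to load NULL or divert such rows to an error output so the original value is not lost.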

Lessons:

- An upstream schema change was the root cause.

- The resulting data type and range mismatch triggered the error.

- A Derived Column transformation normalized the out-of-range values.

- Logging and monitoring make the next occurrence easier to catch.

6. Checklist for “Fixing SSIS 469”

Here’s a handy checklist you can follow when you encounter or want to avoid SSIS 469-style errors.

| Step | Action |
|---|---|
| ✅ Logging | Ensure detailed logging is enabled (OnError, OnTaskFailed, Data Flow) |
| ✅ Identify component | Find which Data Flow Task / Transformation failed |
| ✅ Schema check | Compare source/target schema changes; check columns, datatypes, constraints |
| ✅ Metadata check | In SSIS, validate metadata for that component (Input/Output) |
| ✅ Data conversion | Review transformations for explicit type conversion and range checks |
| ✅ Connection check | Test connection managers, credentials, network, permissions |
| ✅ Resource check | Inspect buffer size, memory usage, system resources during run |
| ✅ Custom code check | If using Script Components / custom tasks, inspect source code for exceptions |
| ✅ Monitor & alert | Ensure you have alerts/logs for failures so they’re caught early |
| ✅ Document & version | Document changes, version control your SSIS packages and schema changes |

7. When to escalate beyond the basics

If you’ve gone through the steps above and are still stuck, consider these:

- Check for platform issues: some failures may be caused by server resource shortages (disk I/O, memory pressure), or SSIS version/incompatibility.

- Check for system‐wide changes: a DB upgrade, schema redesign, driver update may be behind the issue.

- Check for hidden transformations or bypassed rows: maybe the failure is masked; enabling data viewers or row counts helps.

- Use profiler/tracing: For major issues, capturing SQL Server Profiler or Extended Events may help identify timeouts, deadlocks or hidden constraint violations.

- Consider splitting large packages: If a package is handling enormous data volumes in one flow, consider re‐architecting into staging + incremental processes.

8. Final thoughts

The “SSIS 469 error” label is shorthand: what you’re really dealing with is a data-flow or validation failure in an SSIS package. The key is not to fix the error code, but to understand what underlying condition caused the package to fail. Schema drift, data type mismatches, connection failures, and buffer issues are all common culprits.

By following a systematic process (logging, identifying the failing component, then checking schema and data types, connections, and resources) you can diagnose and repair the issue. And by applying prevention practices (monitoring changes, explicit conversions, modular design, proper buffer settings, good logging) you reduce overhead and avoid recurrence.

If you’re managing ETL pipelines with SSIS, making your packages resilient to these kinds of issues is critical for reliable operations, smoother data integration and fewer late‐night firefights.